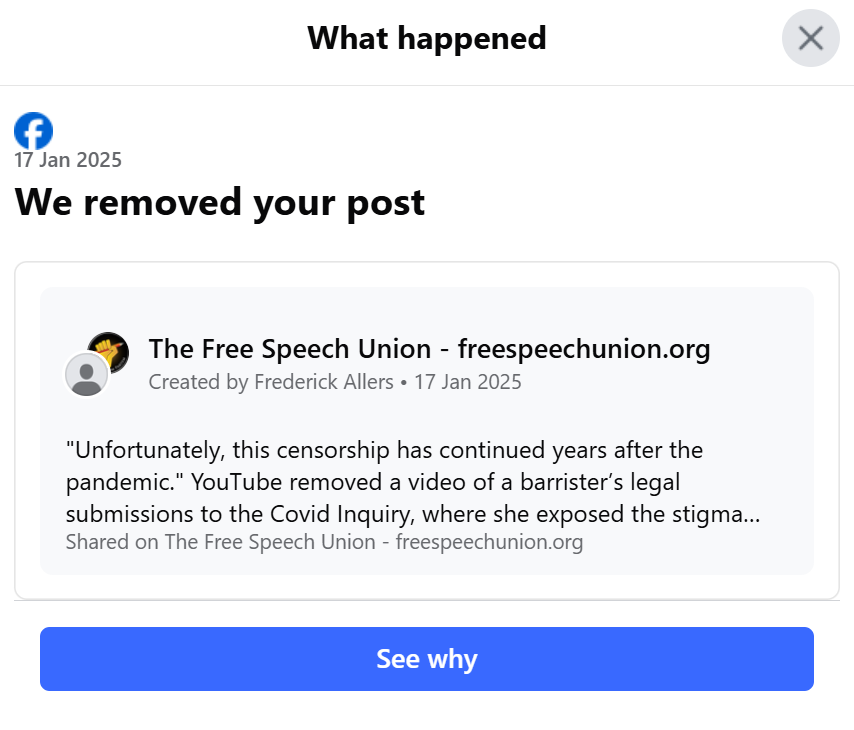

LAST FRIDAY, Facebook removed a Free Speech Union (FSU) post about YouTube’s deletion of a video about vaccine harms. So much for Mark Zuckerberg’s fancy talk about ‘free speech’.

The post, which highlighted the stigma and, ironically, the censorship faced by those injured by the Covid vaccines, was of course entirely lawful. Indeed, it was made by a human rights lawyer who is giving evidence to the Covid inquiry. Its removal raises questions about whether the platform's pivot to a 'Community Notes' system (part of a broader, sector-wide turn towards user-based verification) is really the historic moment for online free speech that some observers claim.

On January 7, Mark Zuckerberg announced that Meta would discontinue its third-party fact-checking programme in favour of a user-based Community Notes system, mirroring the approach taken by Elon Musk's X.

‘Fact-checkers have just been too politically biased and have destroyed more trust than they have created,’ Zuckerberg said, adding that Facebook would ‘get rid of a bunch of restrictions’ on topics including gender and immigration amid a wider corporate backlash against diversity and inclusion initiatives.

‘What started as a movement to be more inclusive has increasingly been used to shut down opinions,’ he added.

Meta plans to roll out its Community Notes system over the next few months, allowing users to judge whether posts are false or misleading. Although no dates for a global rollout have been disclosed, the company intends to evaluate the system's effectiveness in the US before considering expansion to other regions.

The announcement led some to commend Zuckerberg's commitment to free speech. But while he touts Community Notes as a human-centred move towards greater expression, Facebook's continued reliance on 'back-end' machine-learning algorithms (which we believe were responsible for the removal of our post) casts doubt on the platform's commitment to reform. The question remains: will this change address the broader problems with Meta's automated content moderation?

To be clear, this isn’t the first time Facebook has removed one of our posts. This has never been because what we posted was false or misleading – or illegal, obviously. In almost every case, it’s because the subject of the post was a hot-button issue, such as the clash between trans rights and women’s sex-based rights, the application of critical race theory in public life, the adequacy of public health responses to the Covid pandemic, and the push to implement Net Zero policies.

This highlights a critical issue: perfectly lawful views and opinions are being routinely censored by Facebook because of vague, subjective definitions of 'hate speech' and 'misinformation'.

The problem is that, while Community Notes empowers users to provide context and flag potentially misleading information, it is concerned primarily with the accuracy of content. Meanwhile, Facebook's automated moderation systems continue to operate in the background, identifying and acting on content deemed to violate the platform's policies. These AI-driven systems are unlikely to be phased out, not least because of their central role in ensuring compliance with regulations such as the EU's Digital Services Act (DSA). The DSA requires large platforms to act swiftly on illegal content and to mitigate systemic risks, which, at Facebook's scale, makes automated moderation both a legal and a practical necessity.

In other words, Community Notes may well be a nice-sounding, decentralised system that’s suggestive of a move towards free speech. The reality, as evidenced by the removal of our post, is that the shadowy algorithms hidden behind this public-facing veneer – still crunching away and driving Meta’s moderation policies – remain deeply problematic for anyone hoping that Big Tech in the Trump 2.0 era will uphold freedom of expression.